Database 101 - Relational databases and introduction to mysql?

this course is taken from “School of SRE” repository

Relational Databases

Prerequisites

- Complete Linux 101 course

What to expect from this course

You will have an understanding of what relational databases are, their advantages, and some MySQL specific concepts.

What is not covered under this course

-

In depth implementation details

-

Advanced topics like normalization, sharding

-

Specific tools for administration

Introduction

The main purpose of database systems is to manage data. This includes storage, adding new data, deleting unused data, updating existing data, retrieving data within a reasonable response time, other maintenance tasks to keep the system running etc.

Pre-reads

Course Contents

- Relational DBs are used for data storage. Even a file can be used to store data, but relational DBs are designed with specific goals:

- Efficiency

- Ease of access and management

- Organized

- Handle relations between data (represented as tables)

- Transaction: a unit of work that can comprise multiple statements, executed together

-

ACID properties

Set of properties that guarantee data integrity of DB transactions

- Atomicity: Each transaction is atomic (succeeds or fails completely)

- Consistency: Transactions only result in valid state (which includes rules, constraints, triggers etc.)

- Isolation: Each transaction is executed independently of others safely within a concurrent system

- Durability: Completed transactions will not be lost due to any later failures

Let’s take some examples to illustrate the above properties.

- Account A has a balance of ₹200 & B has ₹400. Account A is transferring ₹100 to Account B. This transaction has a deduction from sender and an addition into the recipient’s balance. If the first operation passes successfully while the second fails, A’s balance would be ₹100 while B would be having ₹400 instead of ₹500. Atomicity in a DB ensures this partially failed transaction is rolled back.

- If the second operation above fails, it leaves the DB inconsistent (sum of balance of accounts before and after the operation is not the same). Consistency ensures that this does not happen.

- There are three operations, one to calculate interest for A’s account, another to add that to A’s account, then transfer ₹100 from B to A. Without isolation guarantees, concurrent execution of these 3 operations may lead to a different outcome every time.

- What happens if the system crashes before the transactions are written to disk? Durability ensures that the changes are applied correctly during recovery.

- Relational data

- Tables represent relations

- Columns (fields) represent attributes

- Rows are individual records

- Schema describes the structure of DB

-

SQL

A query language to interact with and manage data.

CRUD operations - create, read, update, delete queries

Management operations - create DBs/tables/indexes etc, backup, import/export, users, access controls

Exercise: Classify the below queries into the four types - DDL (definition), DML(manipulation), DCL(control) and TCL(transactions) and explain in detail.

insert, create, drop, delete, update, commit, rollback, truncate, alter, grant, revokeYou can practise these in the below.

Labs are created from the lab section.

-

Constraints

Rules for data that can be stored. Query fails if you violate any of these defined on a table.

Primary key: one or more columns that contain UNIQUE values, and cannot contain NULL values. A table can have only ONE primary key. An index on it is created by default.

Foreign key: links two tables together. Its value(s) match a primary key in a different table \ Not null: Does not allow null values \ Unique: Value of column must be unique across all rows \ Default: Provides a default value for a column if none is specified during insert

Check: Allows only particular values (like Balance >= 0)

-

Most indexes use B+ tree structure.

Why use them: Speeds up queries (in large tables that fetch only a few rows, min/max queries, by eliminating rows from consideration etc)

Types of indexes: unique, primary key, fulltext, secondary

Write-heavy loads, mostly full table scans or accessing large number of rows etc. do not benefit from indexes

-

Allows you to fetch related data from multiple tables, linking them together with some common field. Powerful but also resource-intensive and makes scaling databases difficult. This is the cause of many slow performing queries when run at scale, and the solution is almost always to find ways to reduce the joins.

-

DBs have privileged accounts for admin tasks, and regular accounts for clients. There are finegrained controls on what actions(DDL, DML etc. discussed earlier )are allowed for these accounts.

DB first verifies the user credentials (authentication), and then examines whether this user is permitted to perform the request (authorization) by looking up these information in some internal tables.

Other controls include activity auditing that allows examining the history of actions done by a user, and resource limits which define the number of queries, connections etc. allowed.

Popular databases

Commercial, closed source - Oracle, Microsoft SQL Server, IBM DB2

Open source with optional paid support - MySQL, MariaDB, PostgreSQL

Individuals and small companies have always preferred open source DBs because of the huge cost associated with commercial software.

In recent times, even large organizations have moved away from commercial software to open source alternatives because of the flexibility and cost savings associated with it.

Lack of support is no longer a concern because of the paid support available from the developer and third parties.

MySQL is the most widely used open source DB, and it is widely supported by hosting providers, making it easy for anyone to use. It is part of the popular Linux-Apache-MySQL-PHP (LAMP) stack that became popular in the 2000s. We have many more choices for a programming language, but the rest of that stack is still widely used.

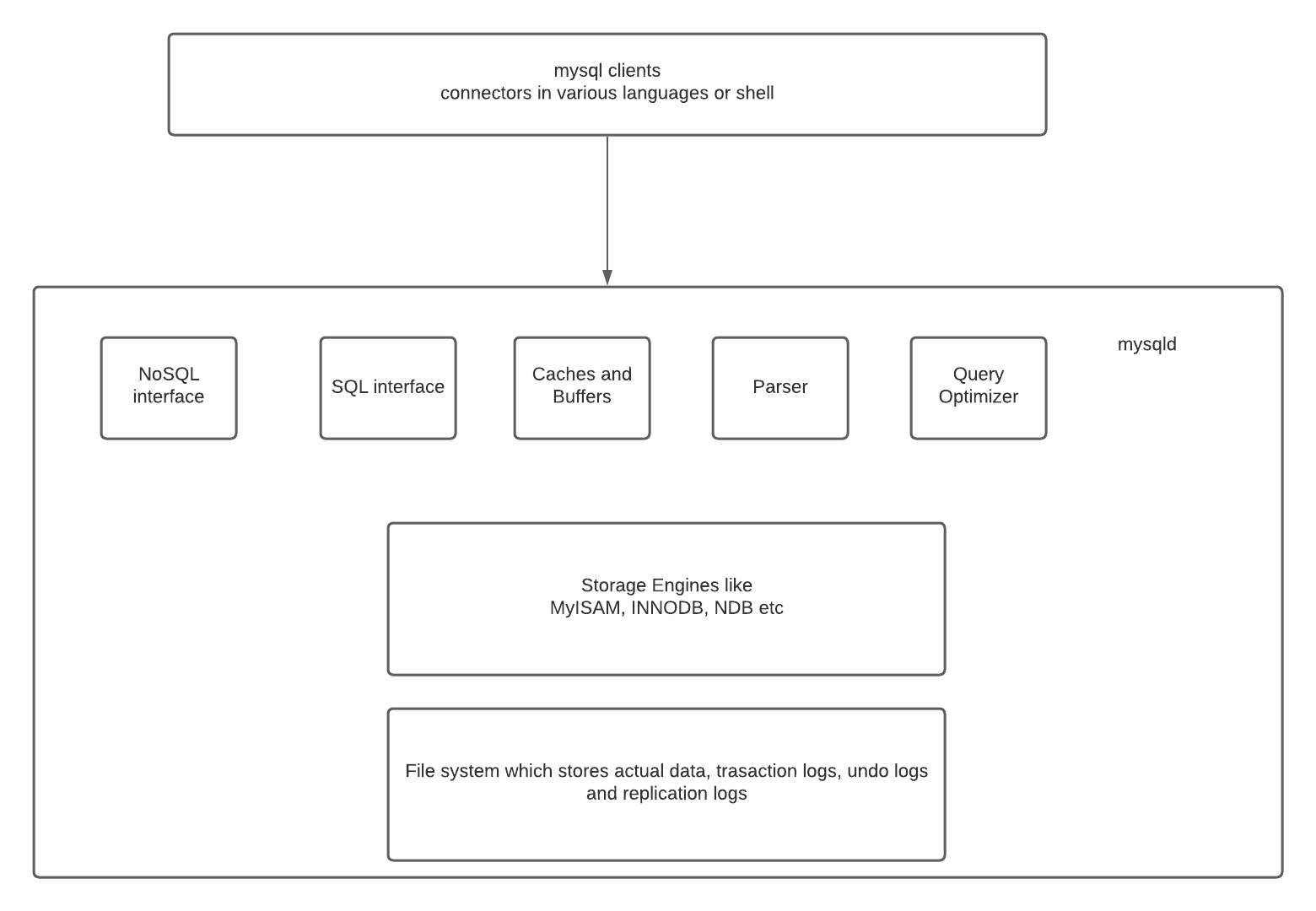

MySQL architecture

MySQL architecture enables you to select the right storage engine for your needs, and abstracts away all implementation details from the end users (application engineers and DBA) who only need to know a consistent stable API.

Application layer:

- Connection handling - each client gets its own connection which is cached for the duration of access)

- Authentication - server checks (username,password,host) info of client and allows/rejects connection

- Security: server determines whether the client has privileges to execute each query (check with show privileges command)

Server layer:

- Services and utilities - backup/restore, replication, cluster etc

- SQL interface - clients run queries for data access and manipulation

- SQL parser - creates a parse tree from the query (lexical/syntactic/semantic analysis and code generation)

- Optimizer - optimizes queries using various algorithms and data available to it(table level stats), modifies queries, order of scanning, indexes to use etc. (check with explain command)

- Caches and buffers - cache stores query results, buffer pool(InnoDB) stores table and index data in LRU fashion

Storage engine options:

- InnoDB: most widely used, transaction support, ACID compliant, supports row-level locking, crash recovery and multi-version concurrency control. Default since MySQL 5.5+.

- MyISAM: fast, does not support transactions, provides table-level locking, great for read-heavy workloads, mostly in web and data warehousing. Default upto MySQL 5.1.

- Archive: optimised for high speed inserts, compresses data as it is inserted, does not support transactions, ideal for storing and retrieving large amounts of seldom referenced historical, archived data

- Memory: tables in memory. Fastest engine, supports table-level locking, does not support transactions, ideal for creating temporary tables or quick lookups, data is lost after a shutdown

- CSV: stores data in CSV files, great for integrating into other applications that use this format

- … etc.

It is possible to migrate from one storage engine to another. But this migration locks tables for all operations and is not online, as it changes the physical layout of the data. It takes a long time and is generally not recommended. Hence, choosing the right storage engine at the beginning is important.

General guideline is to use InnoDB unless you have a specific need for one of the other storage engines.

Running

mysql> SHOW ENGINES;

shows you the supported engines on your MySQL server.

Why should you use this?

General purpose, row level locking, ACID support, transactions, crash recovery and multi-version concurrency control etc.

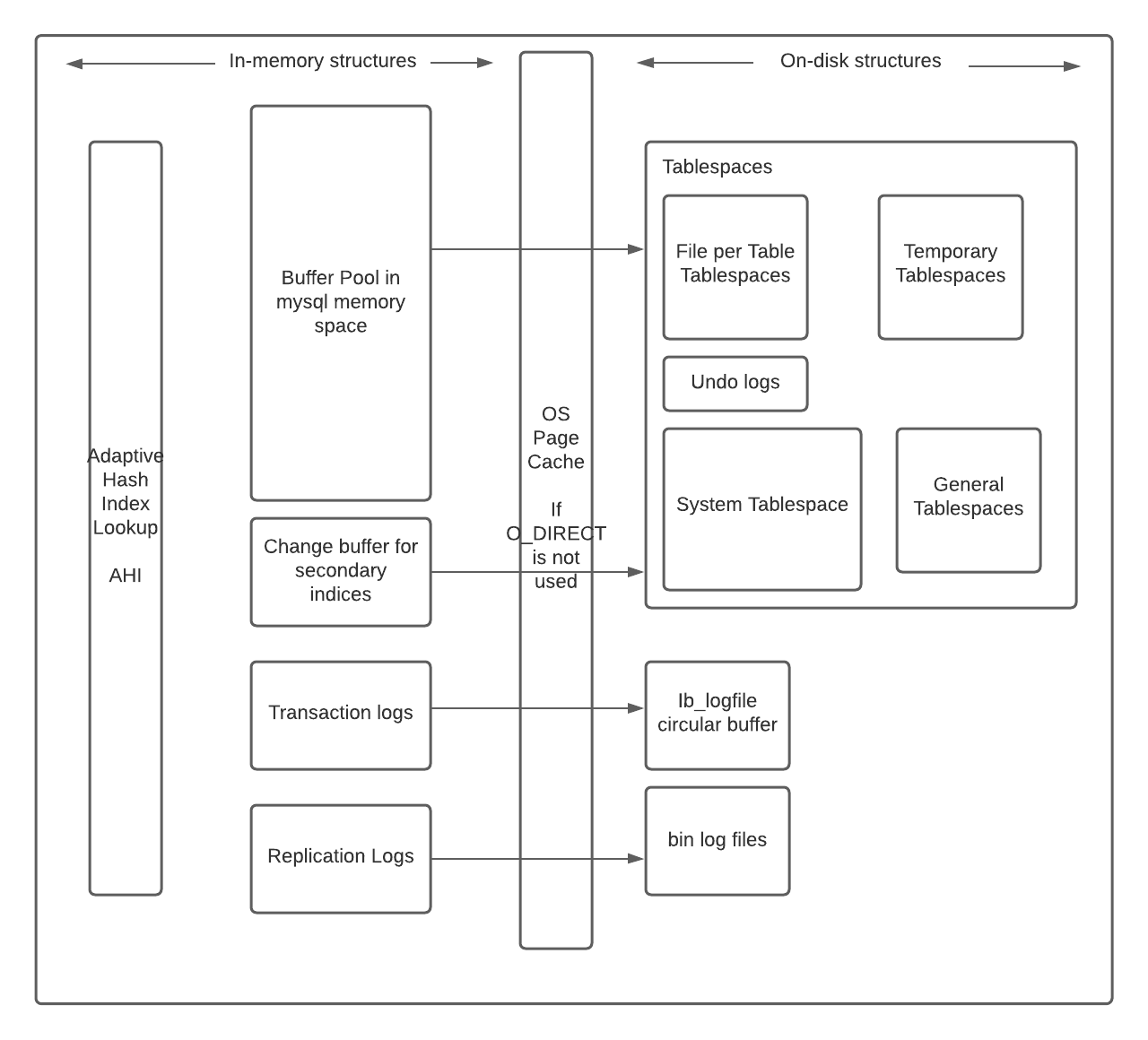

Architecture

Key components:

- Memory:

- Buffer pool: LRU cache of frequently used data(table and index) to be processed directly from memory, which speeds up processing. Important for tuning performance.

- Change buffer: Caches changes to secondary index pages when those pages are not in the buffer pool and merges it when they are fetched. Merging may take a long time and impact live queries. It also takes up part of the buffer pool. Avoids the extra I/O to read secondary indexes in.

- Adaptive hash index: Supplements InnoDB’s B-Tree indexes with fast hash lookup tables like a cache. Slight performance penalty for misses, also adds maintenance overhead of updating it. Hash collisions cause AHI rebuilding for large DBs.

-

Log buffer: Holds log data before flush to disk.

Size of each above memory is configurable, and impacts performance a lot. Requires careful analysis of workload, available resources, benchmarking and tuning for optimal performance.

- Disk:

- Tables: Stores data within rows and columns.

- Indexes: Helps find rows with specific column values quickly, avoids full table scans.

- Redo Logs: all transactions are written to them, and after a crash, the recovery process corrects data written by incomplete transactions and replays any pending ones.

- Undo Logs: Records associated with a single transaction that contains information about how to undo the latest change by a transaction.

Backup and Recovery

Backups are a very crucial part of any database setup. They are generally a copy of the data that can be used to reconstruct the data in case of any major or minor crisis with the database. In general terms backups can be of two types:-

- Physical Backup - the data directory as it is on the disk

- Logical Backup - the table structure and records in it

Both the above kinds of backups are supported by MySQL with different tools. It is the job of the SRE to identify which should be used when.

Mysqldump

This utility is available with MySQL installation. It helps in getting the logical backup of the database. It outputs a set of SQL statements to reconstruct the data. It is not recommended to use mysqldump for large tables as it might take a lot of time and the file size will be huge. However, for small tables it is the best and the quickest option.

mysqldump [options] > dump_output.sql

There are certain options that can be used with mysqldump to get an appropriate dump of the database.

To dump all the databases

mysqldump -u<user> -p<pwd> --all-databases > all_dbs.sql

To dump specific databases

mysqldump -u<user> -p<pwd> --databases db1 db2 db3 > dbs.sql

To dump a single database

mysqldump -u<user> -p<pwd> --databases db1 > db1.sql

OR

mysqldump -u<user> -p<pwd> db1 > db1.sql

The difference between the above two commands is that the latter one does not contain the CREATE DATABASE command in the backup output.

To dump specific tables in a database

mysqldump -u<user> -p<pwd> db1 table1 table2 > db1_tables.sql

To dump only table structures and no data

mysqldump -u<user> -p<pwd> --no-data db1 > db1_structure.sql

To dump only table data and no CREATE statements

mysqldump -u<user> -p<pwd> --no-create-info db1 > db1_data.sql

To dump only specific records from a table

mysqldump -u<user> -p<pwd> --no-create-info db1 table1 --where=”salary>80000” > db1_table1_80000.sql

Mysqldump can also provide output in CSV, other delimited text or XML format to support use-cases if any. The backup from mysqldump utility is offline i.e. when the backup finishes it will not have the changes to the database which were made when the backup was going on. For example, if the backup started at 3 PM and finished at 4 PM, it will not have the changes made to the database between 3 and 4 PM.

Restoring from mysqldump can be done in the following two ways:-

From shell

mysql -u<user> -p<pwd> < all_dbs.sql

OR

From shell if the database is already created

mysql -u<user> -p<pwd> db1 < db1.sql

From within MySQL shell

mysql> source all_dbs.sql

Percona Xtrabackup

This utility is installed separately from the MySQL server and is open source, provided by Percona. It helps in getting the full or partial physical backup of the database. It provides online backup of the database i.e. it will have the changes made to the database when the backup was going on as explained at the end of the previous section.

- Full Backup - the complete backup of the database.

- Partial Backup - Incremental

- Cumulative - After one full backup, the next backups will have changes post the full backup. For example, we took a full backup on Sunday, from Monday onwards every backup will have changes after Sunday; so, Tuesday’s backup will have Monday’s changes as well, Wednesday’s backup will have changes of Monday and Tuesday as well and so on.

- Differential - After one full backup, the next backups will have changes post the previous incremental backup. For example, we took a full backup on Sunday, Monday will have changes done after Sunday, Tuesday will have changes done after Monday, and so on.

Percona xtrabackup allows us to get both full and incremental backups as we desire. However, incremental backups take less space than a full backup (if taken per day) but the restore time of incremental backups is more than that of full backups.

Creating a full backup

xtrabackup --defaults-file=<location to my.cnf> --user=<mysql user> --password=<mysql password> --backup --target-dir=<location of target directory>

Example

xtrabackup --defaults-file=/etc/my.cnf --user=some_user --password=XXXX --backup --target-dir=/mnt/data/backup/

Some other options

--stream- can be used to stream the backup files to standard output in a specified format. xbstream is the only option for now.--tmp-dir- set this to a tmp directory to be used for temporary files while taking backups.--parallel- set this to the number of threads that can be used to parallely copy data files to target directory.--compress- by default - quicklz is used. Set this to have the backup in compressed format. Each file is a .qp compressed file and can be extracted by qpress file archiver.--decompress- decompresses all the files which were compressed with the .qp extension. It will not delete the .qp files after decompression. To do that, use--remove-originalalong with this. Please note that the decompress option should be run separately from the xtrabackup command that used the compress option.

Preparing a backup

Once the backup is done with the –backup option, we need to prepare it in order to restore it. This is done to make the datafiles consistent with point-in-time. There might have been some transactions going on while the backup was being executed and those have changed the data files. When we prepare a backup, all those transactions are applied to the data files.

xtrabackup --prepare --target-dir=<where backup is taken>

Example

xtrabackup --prepare --target-dir=/mnt/data/backup/

It is not recommended to halt a process which is preparing the backup as that might cause data file corruption and backup cannot be used further. The backup will have to be taken again.

Restoring a Full Backup

To restore the backup which is created and prepared from above commands, just copy everything from the backup target-dir to the data-dir of MySQL server, change the ownership of all files to mysql user (the linux user used by MySQL server) and start mysql.

Or the below command can be used as well,

xtrabackup --defaults-file=/etc/my.cnf --copy-back --target-dir=/mnt/data/backups/

Note - the backup has to be prepared in order to restore it.

Creating Incremental backups

Percona Xtrabackup helps create incremental backups, i.e only the changes can be backed up since the last backup. Every InnoDB page contains a log sequence number or LSN that is also mentioned as one of the last lines of backup and prepare commands.

xtrabackup: Transaction log of lsn <LSN> to <LSN> was copied.

OR

InnoDB: Shutdown completed; log sequence number <LSN>

<timestamp> completed OK!

This indicates that the backup has been taken till the log sequence number mentioned. This is a key information in understanding incremental backups and working towards automating one. Incremental backups do not compare data files for changes, instead, they go through the InnoDB pages and compare their LSN to the last backup’s LSN. So, without one full backup, the incremental backups are useless.

The xtrabackup command creates a xtrabackup_checkpoint file which has the information about the LSN of the backup. Below are the key contents of the file:-

backup_type = full-backuped | incremental

from_lsn = 0 (full backup) | to_lsn of last backup <LSN>

to_lsn = <LSN>

last_lsn = <LSN>

There is a difference between to_lsn and last_lsn. When the last_lsn is more than to_lsn that means there are transactions that ran while we took the backup and are yet to be applied. That is what –prepare is used for.

To take incremental backups, first, we require one full backup.

xtrabackup --defaults-file=/etc/my.cnf --user=some_user --password=XXXX --backup --target-dir=/mnt/data/backup/full/

Let’s assume the contents of the xtrabackup_checkpoint file to be as follows.

backup_type = full-backuped

from_lsn = 0

to_lsn = 1000

last_lsn = 1000

Now that we have one full backup, we can have an incremental backup that takes the changes. We will go with differential incremental backups.

xtrabackup --defaults-file=/etc/my.cnf --user=some_user --password=XXXX --backup --target-dir=/mnt/data/backup/incr1/ --incremental-basedir=/mnt/data/backup/full/

There are delta files created in the incr1 directory like, ibdata1.delta, db1/tbl1.ibd.delta with the changes from the full directory. The xtrabackup_checkpoint file will thus have the following contents.

backup_type = incremental

from_lsn = 1000

to_lsn = 1500

last_lsn = 1500

Hence, the from_lsn here is equal to the to_lsn of the last backup or the basedir provided for the incremental backups. For the next incremental backup we can use this incremental backup as the basedir.

xtrabackup --defaults-file=/etc/my.cnf --user=some_user --password=XXXX --backup --target-dir=/mnt/data/backup/incr2/ --incremental-basedir=/mnt/data/backup/incr1/

The xtrabackup_checkpoint file will thus have the following contents.

backup_type = incremental

from_lsn = 1500

to_lsn = 2000

last_lsn = 2200

Preparing Incremental backups

Preparing incremental backups is not the same as preparing a full backup. When prepare runs, two operations are performed - committed transactions are applied on the data files and uncommitted transactions are rolled back. While preparing incremental backups, we have to skip rollback of uncommitted transactions as it is likely that they might get committed in the next incremental backup. If we rollback uncommitted transactions the further incremental backups cannot be applied.

We use –apply-log-only option along with –prepare to avoid the rollback phase.

From the last section, we had the following directories with complete backup

/mnt/data/backup/full

/mnt/data/backup/incr1

/mnt/data/backup/incr2

First, we prepare the full backup, but only with the –apply-log-only option.

xtrabackup --prepare --apply-log-only --target-dir=/mnt/data/backup/full

The output of the command will contain the following at the end.

InnoDB: Shutdown complete; log sequence number 1000

<timestamp> Completed OK!

Note the LSN mentioned at the end is the same as the to_lsn from the xtrabackup_checkpoint created for full backup.

Next, we apply the changes from the first incremental backup to the full backup.

xtrabackup --prepare --apply-log-only --target-dir=/mnt/data/backup/full --incremental-dir=/mnt/data/backup/incr1

This applies the delta files in the incremental directory to the full backup directory. It rolls the data files in the full backup directory forward to the time of incremental backup and applies the redo logs as usual.

Lastly, we apply the last incremental backup same as the previous one with just a small change.

xtrabackup --prepare --target-dir=/mnt/data/backup/full --incremental-dir=/mnt/data/backup/incr1

We do not have to use the –apply-log-only option with it. It applies the incr2 delta files to the full backup data files taking them forward, applies redo logs on them and finally rollbacks the uncommitted transactions to produce the final result. The data now present in the full backup directory can now be used to restore.

Note - To create cumulative incremental backups, the incremental-basedir should always be the full backup directory for every incremental backup. While preparing, we can start with the full backup with the –apply-log-only option and use just the last incremental backup for the final –prepare as that has all the changes since the full backup.

Restoring Incremental backups

Once all the above steps are completed, restoring is the same as done for a full backup.

LAB

Prepare the LAB database and populate to the mysql database

First create the environment

PREPARE To create a Mysql database please run the fallowing commands (you can just click below section)

docker run --rm --name mysql-db -p 3306:3306 -e MYSQL_ROOT_PASSWORD=mypassword -d mysql

Populate sample data for the LAB

following will download some sample data and create the database

git clone https://github.com/datacharmer/test_db.git

docker cp test_db mysql-db:/home

docker exec -it mysql-db bash

cd /home/test_db/

mysql -uroot -pmypassword < /home/test_db/employees.sql

mysql -uroot -pmypassword

now you are inside the MYSQL database inside the container. You also have a database prepared for you “EMPLOYEES”

Basic Commands

SHOW DATABASES;

This comand will show you the existing databases on the MYsql database.

USE employees;

This command will switch you to the employees Database

SHOW TABLES;

This command will show you the tables inside the employees Database

Now we will write SQL queries, on most of the databases these commands are the same.

SELECT * FROM employees LIMIT 5;

Show the contents of the employees table of database employees and limit the output to 5 lines

SELECT COUNT(*) FROM employees WHERE gender = 'M' LIMIT 5;

(FILTER) .. Limit the provious output where the gender is ‘M’ only and count the result

SELECT COUNT(*) FROM employees WHERE first_name = 'Sachin';

(FILTER) .. Only show the number of users whose first_name is Sachin

Optimization, Analyze and Explain tables

Use explain and explain analyze to profile a query, identify and add indexes required for improving performance

SHOW INDEX FROM employees FROM employees\G

View all indexes on table (\G is to output horizontally, replace it with a ; to get table output)

EXPLAIN SELECT * FROM employees WHERE emp_no < 10005\G

This query uses an index, identified by ‘key’ field by prefixing explain keyword to the command, we get query plan (including key used)

EXPLAIN SELECT first_name, last_name FROM employees WHERE first_name = 'Sachin'\G

Compare that to the query which does not utilize any index

EXPLAIN ANALYZE SELECT first_name, last_name FROM employees WHERE first_name = 'Sachin'\G

Above query shows how much time this query takes

Now we will create an index, please check how the analyze results will be affected

CREATE INDEX idx_firstname ON employees(first_name);

EXPLAIN ANALYZE SELECT first_name, last_name FROM employees WHERE first_name = 'Sachin';

Actual time=0.551ms for first row, 2.934ms for all rows. A huge improvement!, Also notice that the query involves only an index lookup, and no table scan (reading all rows of the table), which vastly reduces load on the DB.

Please feel free to use the environment to understand how things work inside databases.

Further Reading

- MySQL Point-In-Time-Recovery

- Another MySQL backup tool - mysqlpump

- Another MySQL backup tool - mydumper

- A comparison between mysqldump, mysqlpump and mydumper

- Backup Best Practices

Short quiz to repeat the topic

After selecting the correct options, press the Gonder button. You can see the results true and in false colours.

CRUD is the short of?

- ( ) Collect Request Update Destroy

- (x) Create Read Update Delete

- ( ) Create Repeat Update Destroy

- ( ) Come and Request Update Data

Which one is wrong for ACID properties?

- ( ) Transactions only result in valid state(Consistent)

- ( ) Each transaction is atomic

- (x) Each transaction can be Destroyed independently

- ( ) Each transaction is executed independently than others

what is the result for SELECT FROM employees WHERE first_name = ‘Sachin’;?

- ( ) This query will show the number of users whose name is ‘Sachin’

- ( ) This query will show the users whose name is ‘Sachin’, result will only show users

- (x) This query will show the users whose name is ‘Sachin’, result will show all fields

- ( ) This query will give empty result

What is the result of the following SELECT CREATE INDEX idx_firstname ON employees(first_name); ?

- ( ) Create index with the name idx_firstname on employees table’s first_name fields.

- (x) error

- ( ) Show the result for the name idx_firstname on employees table’s first_name fields.

- ( ) Analyse the INDEX creation for the table employees and field first_name